LGPL

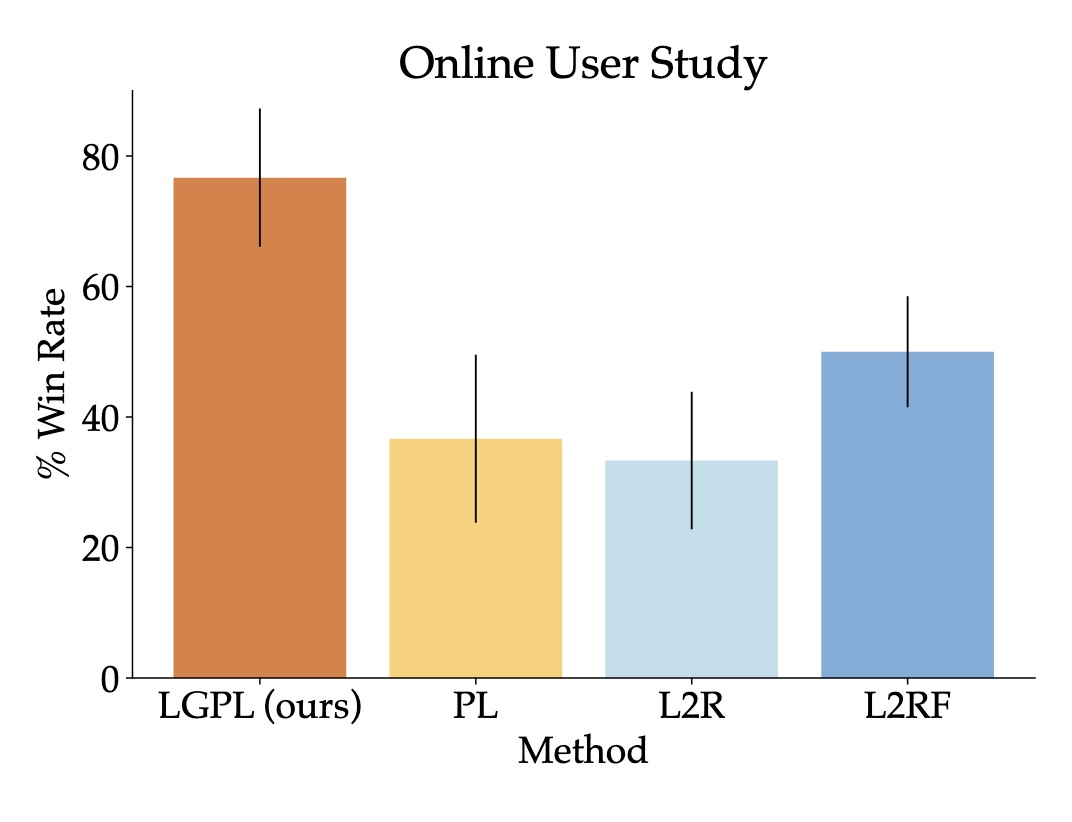

LGPL generates an accurate, human-aligned gait for the scared behavior. We found that LGPL tasks were preferred over competing methods 75.83 ± 5.14% of the time by users in a video survey.

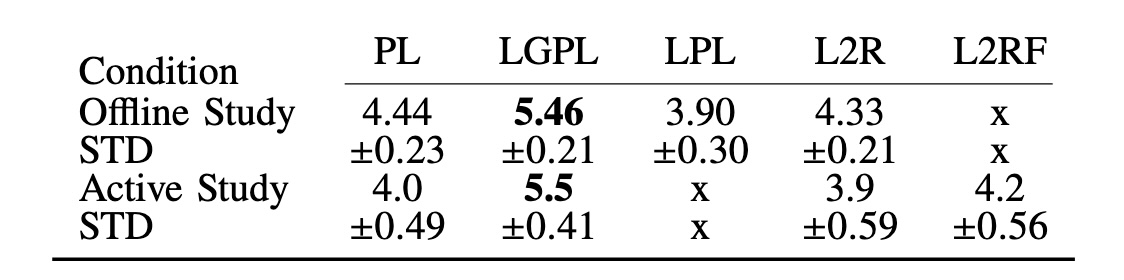

Expressive robotic behavior is essential for the widespread acceptance of robots in social environments. Recent advancements in learned legged locomotion controllers have enabled more dynamic and versatile robot behaviors. However, determining the optimal behavior for interactions with different users across varied scenarios remains a challenge. Current methods either rely on natural language input, which is efficient but low-resolution, or learn from human preferences, which, although high-resolution, is sample inefficient. This paper introduces a novel approach that leverages priors generated by pre-trained LLMs alongside the precision of preference learning. Our method, termed Language-Guided Preference Learning (LGPL), uses LLMs to generate initial behavior samples, which are then refined through preference-based feedback to learn behaviors that closely align with human expectations. Our core insight is that LLMs can guide the sampling process for preference learning, leading to a substantial improvement in sample efficiency. We demonstrate that LGPL can quickly learn accurate and expressive behaviors with as few as four queries, outperforming both purely language-parameterized models and traditional preference learning approaches.
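The loop described above can be illustrated with a minimal sketch; it is not the authors' implementation. It assumes the LLM has already proposed a handful of candidate behaviors, here hard-coded as hypothetical (velocity, pitch) pairs. It also assumes a linear reward fit with a Bradley-Terry preference model, and a simulated user who prefers whichever candidate is closer to a hidden target. All names and numbers are illustrative.

```python
import math

# Hypothetical candidates standing in for LLM-proposed behaviors:
# (forward velocity in m/s, body pitch in rad). Values are made up.
candidates = [
    (0.2, -0.3),
    (0.8, 0.1),
    (0.1, -0.4),
    (0.5, 0.0),
    (0.9, 0.3),
]


def reward(w, x):
    """Linear reward model (an assumption made for this sketch)."""
    return sum(wi * xi for wi, xi in zip(w, x))


def bt_update(w, preferred, rejected, lr=0.5):
    """One gradient-ascent step on the Bradley-Terry log-likelihood."""
    diff = [p - r for p, r in zip(preferred, rejected)]
    margin = sum(wi * di for wi, di in zip(w, diff))
    p_correct = 1.0 / (1.0 + math.exp(-margin))
    return [wi + lr * (1.0 - p_correct) * di for wi, di in zip(w, diff)]


def simulated_user(a, b, target=(0.1, -0.4)):
    """Stand-in for a human: prefers the candidate closer to a hidden target."""
    def dist(x):
        return sum((xi - ti) ** 2 for xi, ti in zip(x, target))
    return (a, b) if dist(a) <= dist(b) else (b, a)


w = [0.0, 0.0]
queries = [(0, 1), (2, 3), (4, 0), (1, 2)]  # four pairwise queries
for i, j in queries:
    preferred, rejected = simulated_user(candidates[i], candidates[j])
    w = bt_update(w, preferred, rejected)

# Pick the candidate with the highest learned reward.
best = max(candidates, key=lambda x: reward(w, x))
print(best)
```

In this toy setting the candidates already lie near plausible behaviors, so a few comparisons are enough to rank them. That is the intuition behind using language priors to seed preference learning.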

"Act excited"

"Act scared"

"Act happy"

"Act sad"

"Act angry"


After receiving semantic feedback, L2RF generates an inaccurate gait due to low-quality feedback from a non-expert user. One round of language feedback improved behaviors only 40% of the time.

We found that LGPL was preferred 76.67 ± 10.59% of the time compared to baseline methods in an active user study.

You are a dog foot contact pattern expert.

Your job is to give a velocity, pitch, and a foot contact pattern based on the input.

You will always give the output in the correct format no matter what the input is.
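The prompt excerpt above does not show the actual output format, so the following is only a hypothetical sketch of how a response carrying a velocity, a pitch, and a foot contact pattern might be parsed and validated. The `key: value` layout, the leg labels `FL`, `FR`, `RL`, `RR`, and the 0/1 string encoding of contacts are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Assumed leg labels: front-left, front-right, rear-left, rear-right.
LEGS = ("FL", "FR", "RL", "RR")


@dataclass
class GaitCommand:
    velocity: float
    pitch: float
    contacts: dict  # leg label -> list of 0/1 contact flags per timestep


def parse_gait(text: str) -> GaitCommand:
    """Parse a hypothetical 'key: value' response into a GaitCommand."""
    fields = {}
    for line in text.strip().splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()

    if "velocity" not in fields or "pitch" not in fields:
        raise ValueError("response must contain velocity and pitch")

    contacts = {}
    for leg in LEGS:
        pattern = fields.get(leg, "")
        # Each pattern must be a non-empty string of 0s and 1s.
        if not pattern or set(pattern) - {"0", "1"}:
            raise ValueError(f"invalid contact pattern for {leg}: {pattern!r}")
        contacts[leg] = [int(c) for c in pattern]

    # All legs must describe the same number of timesteps.
    if len({len(v) for v in contacts.values()}) != 1:
        raise ValueError("contact patterns must have equal length")

    return GaitCommand(float(fields["velocity"]), float(fields["pitch"]), contacts)


example = """velocity: 0.3
pitch: -0.2
FL: 11110000
FR: 00001111
RL: 00001111
RR: 11110000"""

cmd = parse_gait(example)
print(cmd.velocity, cmd.pitch, cmd.contacts["FL"])
```

Validating the response before it reaches the controller is one way to guard against the model departing from the requested format.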

More about Pupper via CS 123.

@article{clark2024lgpl,

author = {Clark, Jaden and Hejna, Joey and Sadigh, Dorsa},

title = {Efficiently Generating Expressive Quadruped Behaviors via Language-Guided Preference Learning},

journal = {preprint},

year = {2024},

}